Text Solution

Recommended Questions

- A 50 MHz sky wave takes 4.04 ms to reach a receiver via retransmission...

- In line-of-sight radio communication, a distance of about 50 is kept...

- A 50 MHz sky wave takes 4.04 ms to reach a receiver via re-tr...

- The transmitting antenna mounted on top of a building has a height of 64 m and the receiving ante...

- (A) In LOS communication, the height of the transmitting antenna is 50 m and the receiving ante...

- A 50 MHz sky wave takes 4.04 ms to reach a receiver via retransmission ...

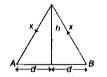

- If h(T) and h(R) are the heights of the transmitting and receiving ante...

- A transmitting antenna has a height of 20 m. What will be the height of r...

- The height of a transmission antenna is 49 m and that of the receiving ...
